Imagine constructing a grand railway network that connects cities across a continent. Every train must arrive on time, every junction must function perfectly, and every cargo container must reach its destination intact. Data integration governance works much the same way — it’s the framework that ensures all information flows between systems seamlessly, securely, and consistently. Without it, even the most powerful analytics tools or business intelligence systems would crumble under the weight of conflicting, duplicate, or incomplete data.

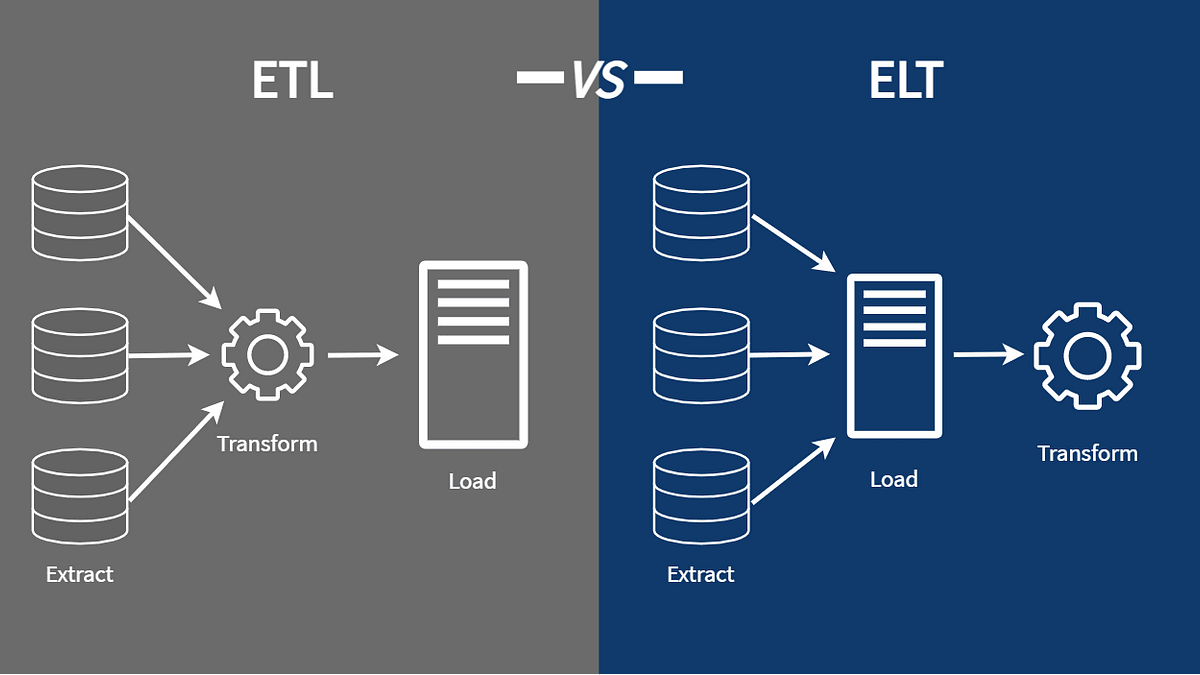

Data integration governance isn’t just about technology — it’s about coordination, discipline, and clarity. It’s about establishing a culture of precision in the ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes that form the backbone of modern analytics infrastructure.

The Symphony of Data Flow: Where Governance Begins

Think of ETL and ELT pipelines as orchestras, where every instrument must play its part in harmony. Governance, in this metaphor, is the conductor — guiding rhythm, tempo, and synchronisation. It ensures that data extracted from different sources, such as CRM systems, ERP platforms, and cloud applications, is transformed and loaded consistently across environments.

Yet, the problem most organisations face isn’t the absence of ETL tools; it’s the absence of uniformity. When each team defines its own rules for naming conventions, data formats, or error handling, chaos takes hold. A robust governance framework introduces standardisation — setting protocols for data lineage, metadata management, and access permissions. It also defines the acceptable thresholds for data quality, so errors are identified before they infect downstream systems.
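To make the idea of shared rules concrete, here is a minimal sketch of two such checks: a naming convention and a per-column quality threshold. The snake_case rule, the column names, and the limits in `MAX_NULL_RATE` are illustrative assumptions, not values prescribed by any particular framework.

```python
import re

# Hypothetical governance rules: snake_case column names and a maximum
# share of missing values allowed per column.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")
MAX_NULL_RATE = {"customer_id": 0.0, "email": 0.05}


def naming_violations(columns):
    """Return the column names that do not follow snake_case."""
    return [name for name in columns if not SNAKE_CASE.match(name)]


def null_rate_violations(rows, thresholds):
    """Return {column: observed null rate} for columns above their limit."""
    violations = {}
    for column, limit in thresholds.items():
        missing = sum(1 for row in rows if row.get(column) is None)
        rate = missing / len(rows) if rows else 0.0
        if rate > limit:
            violations[column] = rate
    return violations


# Illustrative sample data: one of four rows is missing an email.
rows = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
    {"customer_id": 3, "email": "c@example.com"},
    {"customer_id": 4, "email": "d@example.com"},
]
print(naming_violations(["customer_id", "Email Address", "orderTotal"]))
print(null_rate_violations(rows, MAX_NULL_RATE))
```

Keeping the rules in plain data structures, separate from the checking functions, is one way to let every team run the same checks against a single agreed definition.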

For professionals seeking to master this intricate ecosystem, structured Data Analysis courses in Pune often introduce not just the tools but also the governance mindset needed to build systems that can scale responsibly.

Building the Foundations: Standards, Policies, and Ownership

A good governance strategy is rooted in strong foundations — clear policies, roles, and accountability. Think of these as the building codes of your data architecture. Just as engineers adhere to safety regulations when constructing skyscrapers, data engineers must follow predefined standards when building data pipelines.

Governance begins with defining who owns what. Data stewards, architects, and analysts each have distinct responsibilities. Stewards ensure data quality; architects enforce integration design principles; analysts validate that the output supports decision-making goals. Together, they form a chain of accountability that prevents bottlenecks and finger-pointing.

Moreover, governance policies must extend across the full ETL/ELT lifecycle. From extraction through transformation to loading, every step should run automated validation checks, such as schema comparisons and anomaly detection, and record the results in an audit trail. These controls not only support compliance but also provide transparency, helping stakeholders trace the journey of data from source to dashboard.
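A schema comparison paired with an audit record might look roughly like the sketch below. The expected schema, the step name `extract_orders`, and the shape of the audit entry are all hypothetical, chosen only to illustrate the pattern.

```python
from datetime import datetime, timezone

# Hypothetical expected schema for a target table: column name -> type name.
EXPECTED_SCHEMA = {"order_id": "int", "amount": "float", "currency": "str"}


def compare_schema(expected, actual):
    """Report columns that are missing, unexpected, or differently typed."""
    shared = set(expected) & set(actual)
    return {
        "missing": sorted(set(expected) - set(actual)),
        "unexpected": sorted(set(actual) - set(expected)),
        "type_mismatch": sorted(c for c in shared if expected[c] != actual[c]),
    }


def audit_entry(step, diff):
    """Build one audit-trail record for a pipeline step."""
    return {
        "step": step,
        "checked_at": datetime.now(timezone.utc).isoformat(),
        "passed": not any(diff.values()),  # passes only if every list is empty
        "diff": diff,
    }


# Illustrative incoming schema with one wrong type and one renamed column.
actual = {"order_id": "int", "amount": "str", "region": "str"}
entry = audit_entry("extract_orders", compare_schema(EXPECTED_SCHEMA, actual))
print(entry["passed"], entry["diff"])
```

In practice each entry would be appended to durable storage, so that the history of checks can be reviewed later.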

The Human Element: Collaboration Between Teams

In many enterprises, silos remain the biggest obstacle to effective governance. Data integration involves multiple disciplines — engineering, analytics, business operations, and compliance. Without communication between these teams, even well-designed systems can falter.

Imagine a relay race where runners don’t coordinate their handoffs — the baton (in this case, data) drops, and momentum is lost. Governance bridges these gaps by creating common languages: shared glossaries, unified taxonomies, and documentation repositories. It encourages knowledge-sharing and transparency, transforming data management from a back-office chore into a shared strategic asset.

This collaborative approach is also emphasised in Data Analysis courses in Pune, where professionals learn how cross-functional communication strengthens governance frameworks. They explore not just the technical side of ETL processes but also how cultural alignment drives long-term consistency.

Automation and Control: Technology as the Enabler

As data ecosystems grow more complex, manual governance quickly becomes unsustainable. Automation emerges as the enabler — applying controls that enforce standards without slowing innovation.

Modern data integration platforms now include policy-driven workflows that automatically validate transformations, check for compliance, and log every activity for traceability. For instance, if a field fails its defined quality threshold (say, too many missing values), the system can quarantine the affected records, notify the steward, and prevent contamination downstream.
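The quarantine-and-notify behaviour can be sketched in a few lines. This is an assumption-laden illustration rather than any platform's actual workflow: `enforce_threshold`, the `sku` field, and the 10% limit are hypothetical, and the notification is just a callback.

```python
def enforce_threshold(batch, field, max_null_rate, notify_steward):
    """Quarantine the whole batch if `field` has too many missing values.

    Returns (loadable_rows, quarantined_rows); at most one is non-empty.
    """
    if not batch:
        return [], []
    missing = sum(1 for row in batch if row.get(field) is None)
    rate = missing / len(batch)
    if rate > max_null_rate:
        notify_steward(
            f"{field}: null rate {rate:.0%} exceeds limit {max_null_rate:.0%}"
        )
        return [], list(batch)  # nothing is passed downstream
    return list(batch), []


# Illustrative run: half the rows lack a SKU, so the batch is held back.
messages = []
batch = [{"sku": "A1"}, {"sku": None}, {"sku": None}, {"sku": "B2"}]
loadable, quarantined = enforce_threshold(batch, "sku", 0.10, messages.append)
print(len(loadable), len(quarantined), messages)
```

Holding back the entire batch is the conservative choice here; a real policy might instead quarantine only the failing rows.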

Metadata management tools complement this by providing visibility into data lineage — detailing where data originated, how it was transformed, and where it’s stored. This transparency isn’t just a best practice; it’s essential for audits, data privacy compliance, and trust. When governance is automated, teams can innovate faster, secure in the knowledge that every pipeline adheres to established standards.
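As a rough illustration of what a lineage entry captures (origin, transformations, and storage location), consider the following sketch. `LineageRecord` and the dataset names are hypothetical and far simpler than what a real metadata catalogue stores.

```python
from dataclasses import dataclass, field


@dataclass
class LineageRecord:
    """Where a dataset came from, what was done to it, and where it lives."""
    dataset: str
    source: str
    stored_at: str
    transformations: list = field(default_factory=list)

    def add_step(self, description):
        """Append one transformation step and return self for chaining."""
        self.transformations.append(description)
        return self

    def describe(self):
        """Render the full path from source to storage as one line."""
        steps = " -> ".join(self.transformations) or "(no transformations)"
        return f"{self.dataset}: {self.source} -> {steps} -> {self.stored_at}"


# Illustrative names only.
record = LineageRecord(
    "daily_sales", source="crm.orders", stored_at="warehouse.sales_daily"
)
record.add_step("drop test accounts").add_step("aggregate by day")
print(record.describe())
```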

Evolving Governance: From Static Rules to Living Frameworks

Data integration governance is not a one-time project but a living framework that evolves with the organisation. New data sources, regulatory changes, and shifts in business priorities continuously reshape what “good governance” looks like.

A mature governance programme evolves iteratively — incorporating feedback loops, updating standards, and leveraging analytics to monitor its own performance. This self-awareness is crucial: governance must adapt without becoming a bottleneck.

The future of governance lies in its ability to blend flexibility with control — using AI to detect anomalies, predictive analytics to anticipate integration risks, and intelligent cataloguing to maintain a single version of truth. The result is not just consistency, but confidence — a trust that every data-driven decision stands on reliable, verifiable foundations.

Conclusion

Data integration governance may not sparkle like the latest AI or analytics technology, but it provides the invisible scaffolding that supports them all. It ensures every data point, every report, and every model rests on a foundation of accuracy and integrity.

In the end, governance isn’t merely about enforcing rules — it’s about nurturing trust. It’s about designing an ecosystem where information flows freely yet predictably, like a well-engineered river system that sustains life along its course. Organisations that invest in strong ETL/ELT governance frameworks don’t just optimise workflows — they future-proof their entire analytics journey, ensuring that insight never becomes chaos.